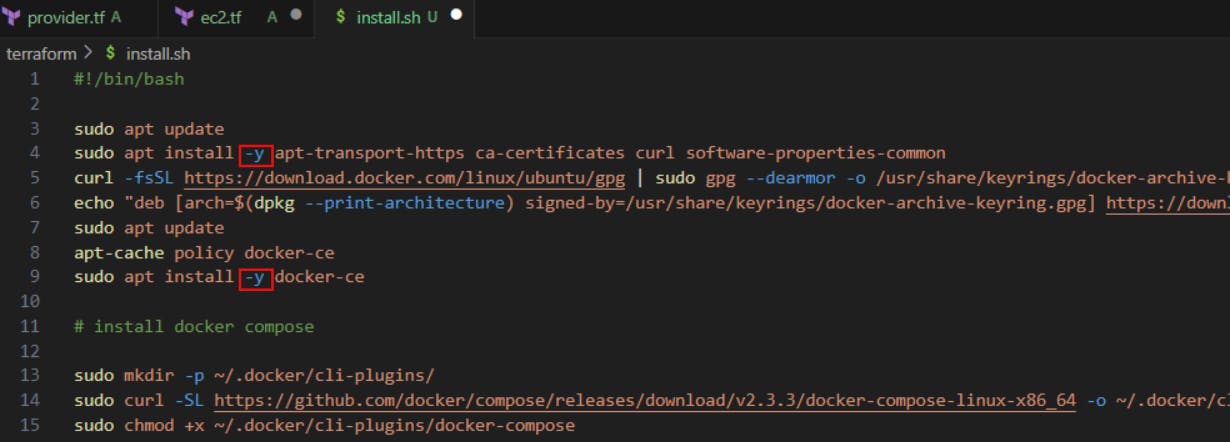

In the install.sh file we run sudo apt install, which pauses midway to ask for a yes/no confirmation. That is where the script was getting stuck, so we added the -y flag to bypass the prompt.

Previously we deleted only the Elastic IP and the EC2 instance. Okay, now let's get started with this task.

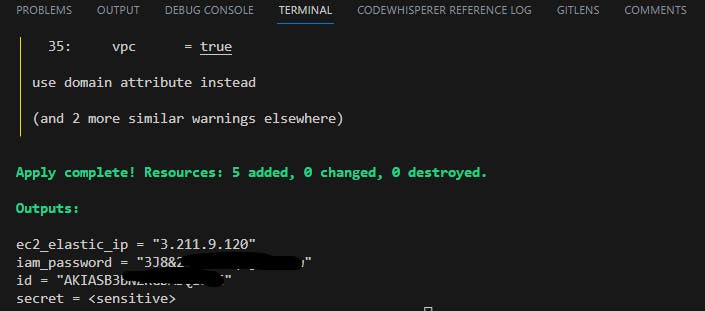

Run terraform init, then terraform plan, and then terraform apply --auto-approve.

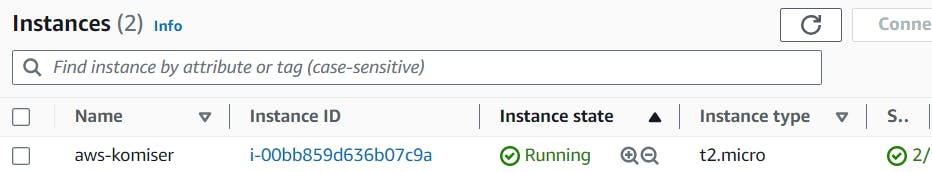

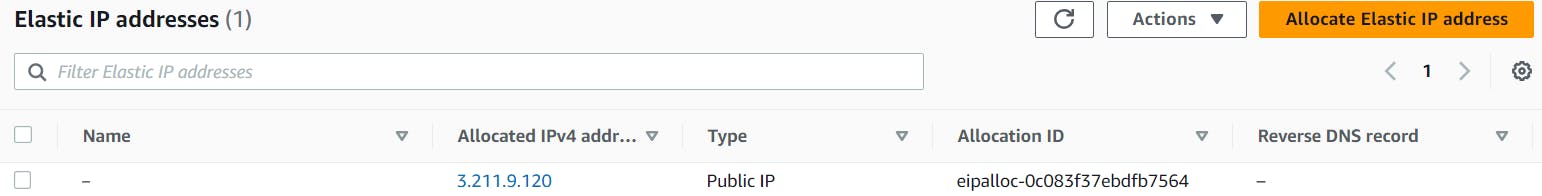

You can see the EC2 instance running, with its ec2_elastic_ip matching the one in the picture above.

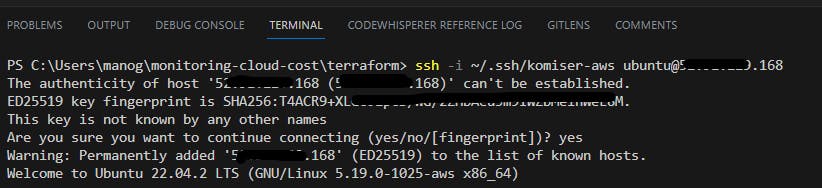

Now let's SSH into the instance:

ssh -i ~/.ssh/komiser-aws ubuntu@3.211.9.120

Now let's check whether Docker is running:

sudo systemctl status docker

Docker Compose should also be installed:

docker compose version

Now we have to configure the elastic load balancer (ELB) to receive our incoming traffic and distribute it according to the load. This is just a basic Django app, so it doesn't really need a load balancer, but in a production use case you would need one. With that in mind, we're provisioning an elastic load balancer as well.

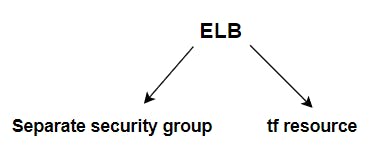

These are the two things that we need for the ELB to work

Separate security group:

A separate security group for the elastic load balancer lets us configure its firewall settings (its incoming and outgoing traffic) independently of the instance, so we need to create an additional security group for it.

So first we create the security group and then the Terraform resource for the load balancer. We link the two, and we also tell the load balancer which instances are running behind it.
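One way to picture the link between security groups is a rule that lets only the load balancer reach the app port on the instance. This is a minimal sketch, not the configuration used in this series: the label `allow_elb_to_instance` and the instance security group reference `aws_security_group.komiser_instance_sg` are hypothetical names, so substitute whatever your instance's security group is actually called.

```hcl
# Hypothetical rule: allow traffic on the app port (8000) into the instance,
# but only when it comes from the ELB security group defined in elb.tf.
resource "aws_security_group_rule" "allow_elb_to_instance" {
  type      = "ingress"
  from_port = 8000
  to_port   = 8000
  protocol  = "tcp"

  # Assumption: this is the security group attached to the EC2 instance.
  security_group_id = aws_security_group.komiser_instance_sg.id

  # Source is a security group rather than a CIDR range.
  source_security_group_id = aws_security_group.komiser_elb_sg.id
}
```

Using a security group as the source means the rule keeps working even if the load balancer's IP addresses change.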

📍tf resource reference

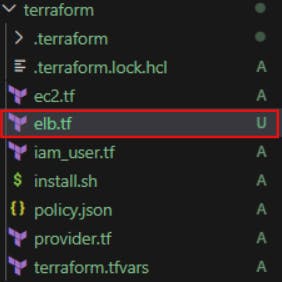

We created the elb.tf file.

Two things we want our elastic load balancer to do:

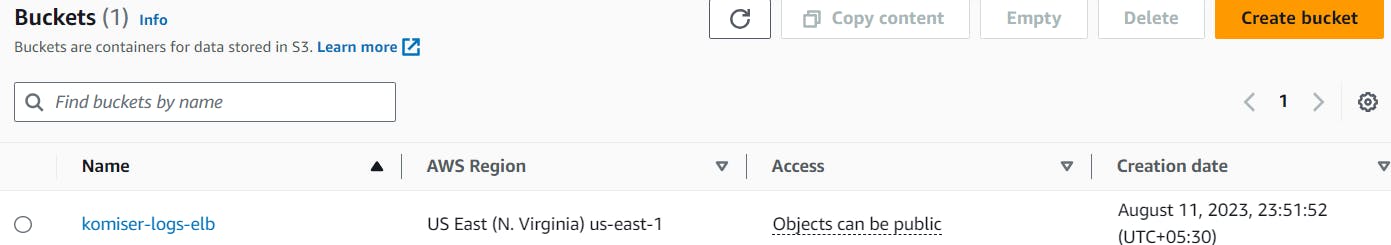

We want the ELB's ingress (incoming traffic) to listen on port 80 and forward requests to port 8000 on the instance, and its egress (outgoing traffic) to be allowed to anywhere on the internet. Now let's create an S3 bucket with default settings to hold the ELB access logs.
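If you would rather keep the log bucket in Terraform than create it by hand, a sketch could look like the following. It assumes the bucket name `komiser-logs-elb` that elb.tf refers to; the resource label `komiser_elb_logs` and the `force_destroy` setting are illustrative choices, not part of the original setup.

```hcl
# Hypothetical Terraform-managed version of the access-log bucket.
resource "aws_s3_bucket" "komiser_elb_logs" {
  # Must match the bucket name used in the access_logs block of the ELB.
  bucket = "komiser-logs-elb"

  # Assumption for a lab setup: let `terraform destroy` remove the bucket
  # even when it still contains log files.
  force_destroy = true

  tags = {
    Name = "aws-komiser"
  }
}
```

Keep in mind that the bucket has to live in the same region as the load balancer for access logging to work.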

    # Resources for the ELB
    resource "aws_security_group" "komiser_elb_sg" {
      name        = "komiser-elb"
      description = "komiser ELB Security Group"

      # Accept HTTP traffic from anywhere on port 80
      ingress {
        from_port   = 80
        to_port     = 80
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # Allow all outbound traffic
      egress {
        from_port        = 0
        to_port          = 0
        protocol         = "-1"
        cidr_blocks      = ["0.0.0.0/0"]
        ipv6_cidr_blocks = ["::/0"]
      }

      tags = {
        Name = "aws-komiser"
      }
    }

    # Create a new load balancer
    resource "aws_elb" "komiser_elb" {
      # ELB names may contain only alphanumeric characters and hyphens
      name               = "komiser-elb"
      availability_zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
      security_groups    = [aws_security_group.komiser_elb_sg.id]
      instances          = [aws_instance.komiser_instance.id]

      # Write access logs to the S3 bucket every 5 minutes
      access_logs {
        bucket   = "komiser-logs-elb"
        interval = 5
      }

      # Forward port 80 on the load balancer to port 8000 on the instance
      listener {
        instance_port     = 8000
        instance_protocol = "http"
        lb_port           = 80
        lb_protocol       = "http"
      }

      health_check {
        healthy_threshold   = 2
        unhealthy_threshold = 2
        timeout             = 3
        target              = "TCP:8000"
        interval            = 30
      }

      cross_zone_load_balancing   = true
      idle_timeout                = 400
      connection_draining         = true
      connection_draining_timeout = 400

      tags = {
        Name = "aws-komiser"
      }
    }

This is the elb.tf file 👆

📍Resource for ELB

Now do

▪️terraform plan and then

▪️terraform apply --auto-approve

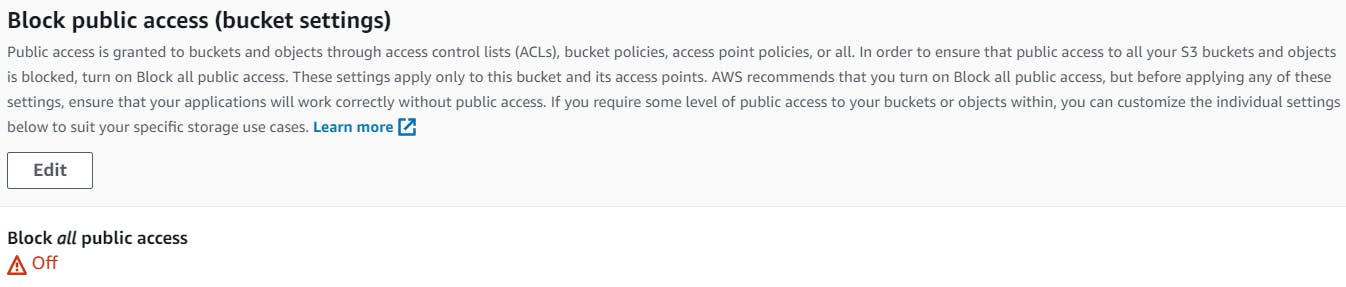

terraform apply threw some errors. To get past them, turn off Block public access on the S3 bucket.
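The same console toggle can also be expressed in Terraform. This is only a sketch of the equivalent setting, assuming the bucket is named `komiser-logs-elb`; the resource label `komiser_elb_logs` is hypothetical. Turning these protections off widens what the bucket allows, so it may be worth switching them back on once logging works.

```hcl
# Hypothetical Terraform equivalent of switching off "Block public access"
# for the log bucket in the S3 console.
resource "aws_s3_bucket_public_access_block" "komiser_elb_logs" {
  bucket = "komiser-logs-elb"

  # All four settings off, matching the console toggle being disabled.
  block_public_acls       = false
  block_public_policy     = false
  ignore_public_acls      = false
  restrict_public_buckets = false
}
```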

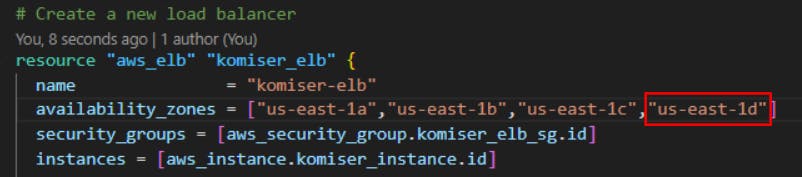

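We also made some more changes to the elb.tf file: we added a bucket policy that allows the ELB service account to write its access logs to the S3 bucket.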

    # Resources for the ELB
    resource "aws_security_group" "komiser_elb_sg" {
      name        = "komiser-elb"
      description = "komiser ELB Security Group"

      # Accept HTTP traffic from anywhere on port 80
      ingress {
        from_port   = 80
        to_port     = 80
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # Allow all outbound traffic
      egress {
        from_port        = 0
        to_port          = 0
        protocol         = "-1"
        cidr_blocks      = ["0.0.0.0/0"]
        ipv6_cidr_blocks = ["::/0"]
      }

      tags = {
        Name = "aws-komiser"
      }
    }

    # AWS account that Elastic Load Balancing uses to deliver logs in this region
    data "aws_elb_service_account" "main" {}

    # Policy that lets the ELB service account put log objects into the bucket
    data "aws_iam_policy_document" "allow_elb_logging" {
      statement {
        effect = "Allow"

        principals {
          type        = "AWS"
          identifiers = [data.aws_elb_service_account.main.arn]
        }

        actions   = ["s3:PutObject"]
        resources = ["arn:aws:s3:::komiser-logs-elb/*"]
      }
    }

    # Attach the policy to the log bucket
    resource "aws_s3_bucket_policy" "allow_elb_logging" {
      bucket = "komiser-logs-elb"
      policy = data.aws_iam_policy_document.allow_elb_logging.json
    }

    # Create a new load balancer
    resource "aws_elb" "komiser_elb" {
      # ELB names may contain only alphanumeric characters and hyphens
      name               = "komiser-elb"
      availability_zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
      security_groups    = [aws_security_group.komiser_elb_sg.id]
      instances          = [aws_instance.komiser_instance.id]

      # Write access logs to the S3 bucket every 5 minutes
      access_logs {
        bucket   = "komiser-logs-elb"
        interval = 5
      }

      # Forward port 80 on the load balancer to port 8000 on the instance
      listener {
        instance_port     = 8000
        instance_protocol = "http"
        lb_port           = 80
        lb_protocol       = "http"
      }

      health_check {
        healthy_threshold   = 2
        unhealthy_threshold = 2
        timeout             = 3
        target              = "TCP:8000"
        interval            = 30
      }

      cross_zone_load_balancing   = true
      idle_timeout                = 400
      connection_draining         = true
      connection_draining_timeout = 400

      tags = {
        Name = "aws-komiser"
      }
    }

Now just do

▪️terraform plan

▪️terraform apply --auto-approve

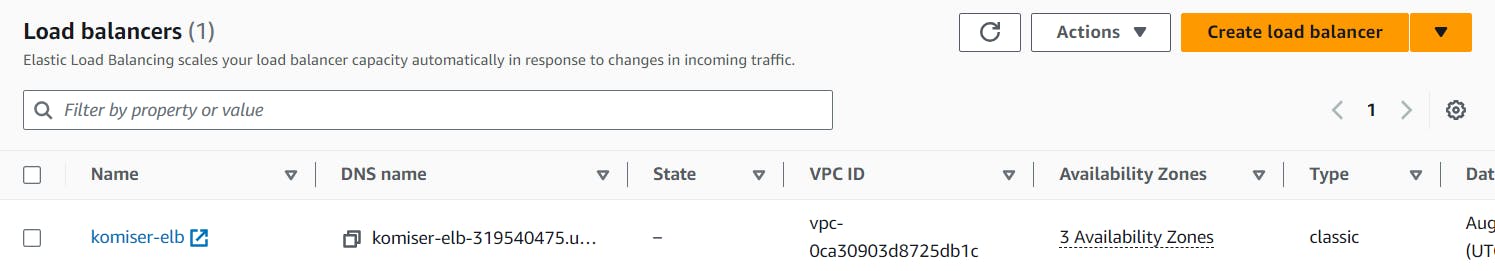

Well, now the load balancer has been created.
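To avoid looking up the load balancer's DNS name in the console every time, an output can print it after each apply. This is a small optional sketch; the output name `komiser_elb_dns_name` is an illustrative choice, while `dns_name` is an attribute exported by the `aws_elb` resource.

```hcl
# Optional: print the load balancer's DNS name after `terraform apply`.
output "komiser_elb_dns_name" {
  description = "Public DNS name of the Komiser load balancer"
  value       = aws_elb.komiser_elb.dns_name
}
```

After the next apply, running terraform output komiser_elb_dns_name shows the value again without re-applying.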

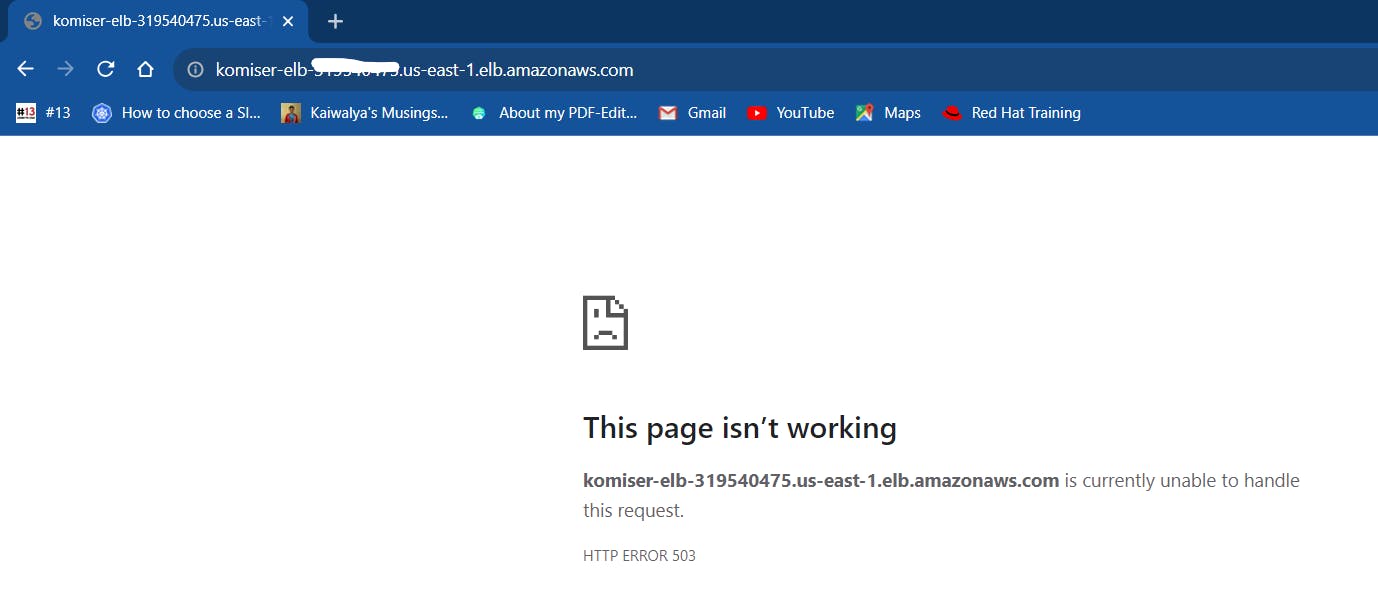

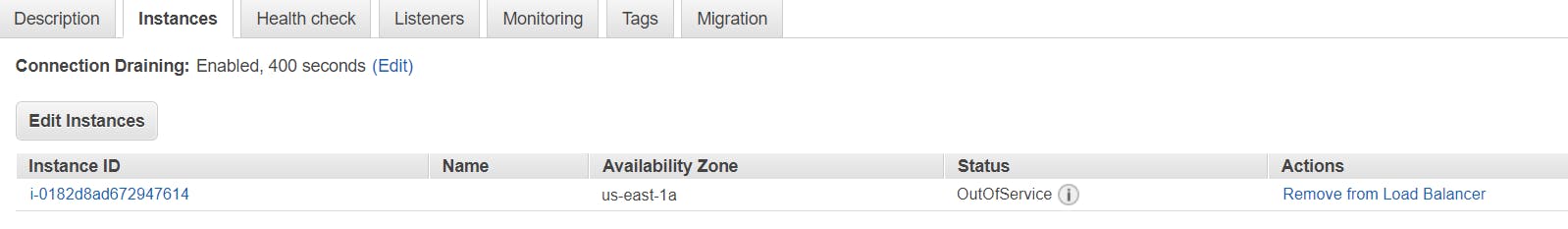

As you can see, the page isn't working, and when I checked the instance 👇 its status was OutOfService.

I hit the DNS name again, and there was no change.

If the application is not running inside the EC2 instance, how would we be able to reach it through the DNS name? It makes sense, right?

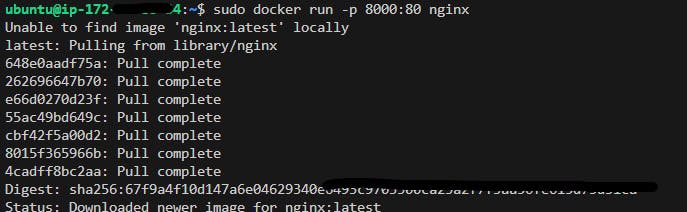
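The OutOfService status follows from the health check in elb.tf: with target = "TCP:8000", the load balancer marks the instance healthy only once something accepts connections on port 8000. As a hedged variation (not what this series uses), an HTTP target additionally checks that the app answers requests. The path "/" is an assumption about the Django app's routes.

```hcl
# Drop-in alternative for the health_check block inside aws_elb.komiser_elb.
health_check {
  healthy_threshold   = 2  # consecutive successes before InService
  unhealthy_threshold = 2  # consecutive failures before OutOfService
  timeout             = 3  # seconds to wait for a response
  interval            = 30 # seconds between checks

  # Assumption: the app responds with HTTP 200 on "/".
  target = "HTTP:8000/"
}
```

With either target, the instance needs two passing checks in a row, so expect it to take about a minute to switch to InService after the app starts.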

So we run the application manually 👇

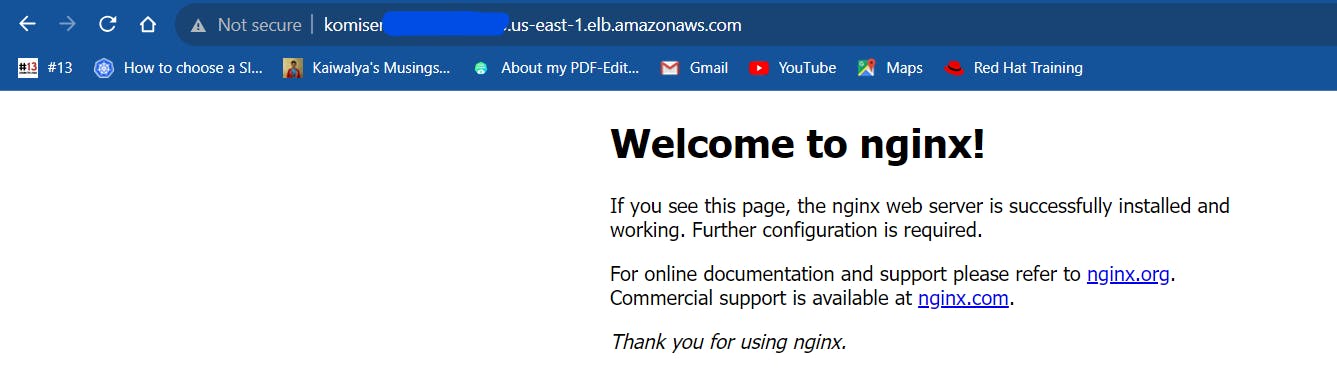

Hurray, the DNS name is working!

So finally, our elastic load balancer is configured and working successfully.

In the next stream, we will learn Ansible and deploy our application

📍**Resources**:

Part2 Blog :

manogna.hashnode.dev/cloud-cost-monitoring-..

Part4 Blog :

https://hashnode.com/edit/cll85u29z000109mmge6346ap

Kubesimplify Github :

github.com/kubesimplify/cloudnative-lab

My Github :