    - name: AWS EC2 Komiser Playbook
      hosts: vm01
      tasks:
        - name: Check if Docker is running!
          ansible.builtin.systemd:
            name: docker.service
            state: started
            enabled: true

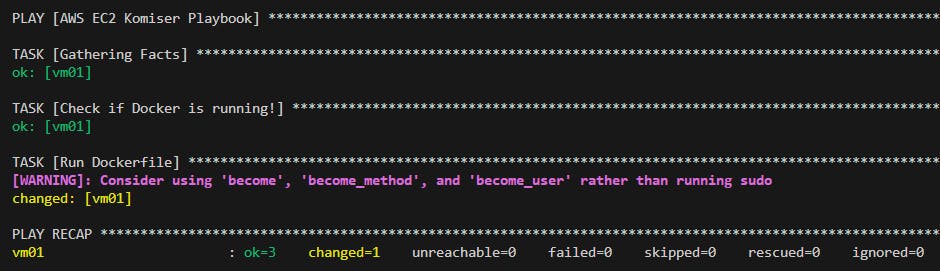

        - name: Run Dockerfile
          ansible.builtin.command:
          args:
            chdir: /cloudnative-lab/projects/ep-cloud-cost-monitoring/project_files
            cmd: sudo docker compose -f docker-compose.yml up -d

The only thing we added here is the sudo 👆. This is the playbook.yaml file after the change.

We can also do it another way, here you go 👇

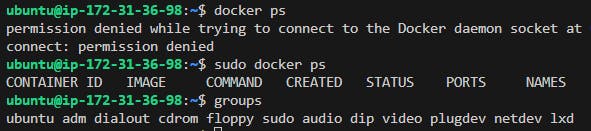

If you run docker ps, you get a permission denied error because your user is not in the docker group. If you run sudo docker ps, the command works. So one option is to use sudo, but if you don't want to type sudo every time, you can add your user to the docker group.

Run ansible-playbook -i inventory.yaml playbook.yaml and the output is a success 👇

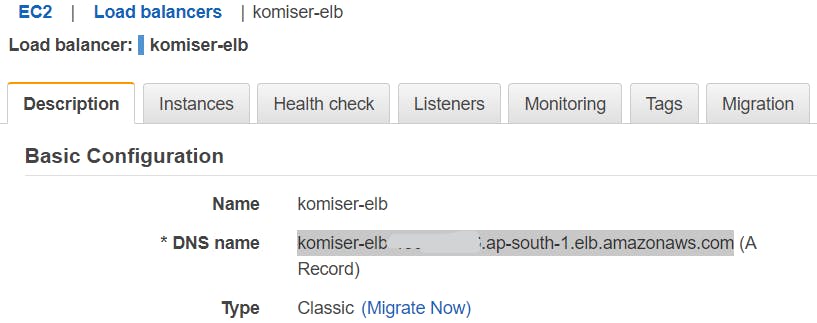

Now go to the load balancer

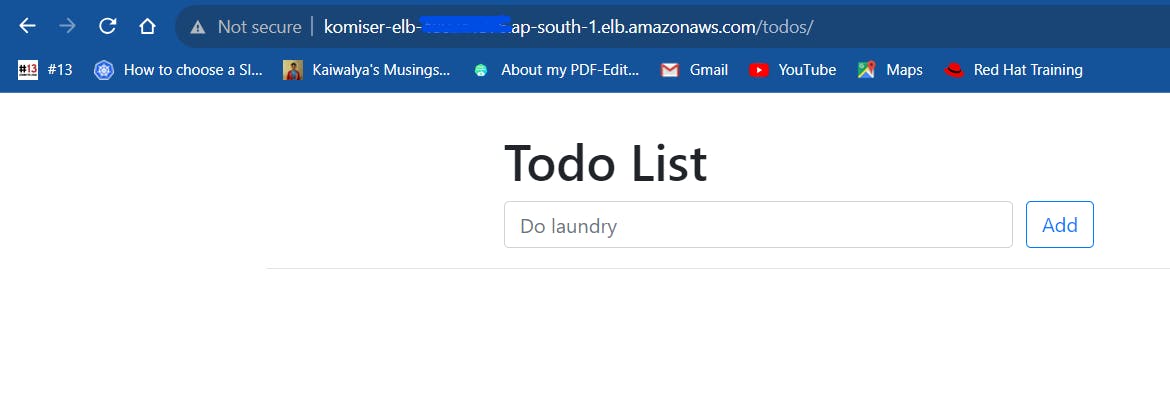

Open the DNS name and it's working 👇

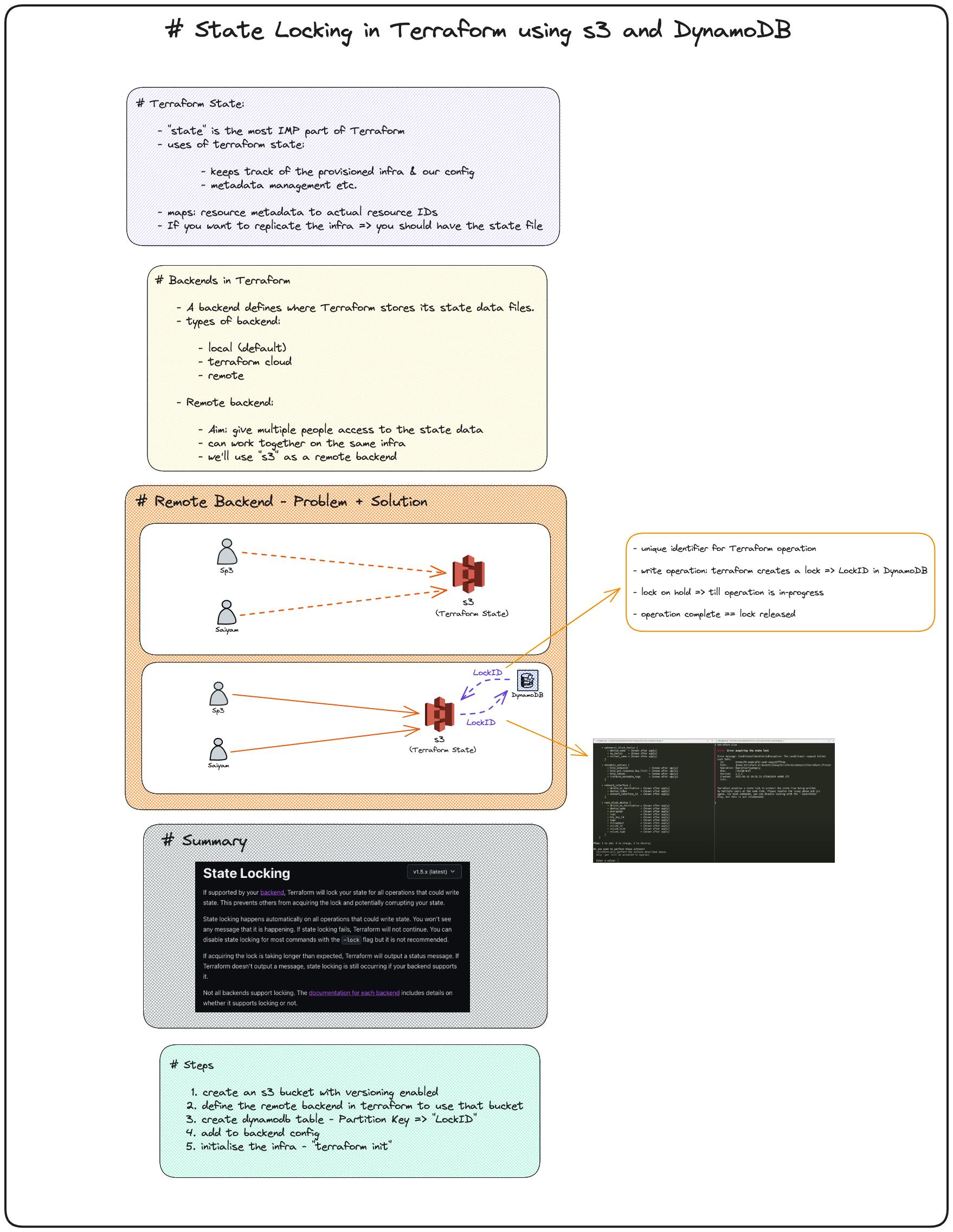

Now let's learn about Terraform state locking

Now we will talk about state files, with reference to the image above

Terraform State:

State is the most important part of Terraform. It records everything about the infrastructure that is provisioned right now and ties it to what is written in the configuration files. All of this is stored inside the terraform.tfstate file.

Metadata here refers to things like IDs and passwords.

Backends in Terraform

A backend defines the location where you want to store the terraform.tfstate file.

- Local: the local backend stores the state file on disk. This is the default.

- Terraform Cloud: you can connect your Terraform configuration to Terraform Cloud.

- Remote: if we want to use a third-party service, for example to store the state file in an S3 bucket, we can use a remote backend.

In a production environment, passwords are stored in a vault, so even if people access the state data, the passwords are kept safe.

Remote Backend - Problem + Solution

Suppose sp3 and Saiyam are working together on the AWS infrastructure in a company, and the Terraform state file is already stored in a backend, in an S3 bucket. Let's suppose sp3 provisions an EC2 instance from his system, so sp3 is constantly requesting write operations on the state file.

We have seen this already: terraform plan generates a plan, and terraform apply writes everything to the state file and provisions your resources. So sp3 creates an EC2 instance for testing purposes, and at the same time Saiyam also runs terraform apply for his own EC2 instance. Now the problem occurs: two developers are trying to write to the state file at the same time.

Imagine a situation where multiple people are working on the same infrastructure. Think of the security vulnerabilities it could cause and the amount of inconsistency it could introduce into the state file.
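To make the inconsistency concrete, here is a minimal sketch of two unsynchronized writers. It is not how Terraform stores state: `remote_state`, `read_state`, and `write_state` are hypothetical stand-ins for a shared state file, and the resource names are made up for illustration.

```python
import copy

# Hypothetical in-memory stand-in for the shared state file (not real Terraform state).
remote_state = {"serial": 1, "resources": []}


def read_state():
    """Each client works on its own private copy, like a downloaded file."""
    return copy.deepcopy(remote_state)


def write_state(new_state):
    """Overwrite the shared state with whatever the client sends back."""
    global remote_state
    remote_state = copy.deepcopy(new_state)


# Both developers read the same starting state before either one writes.
sp3_copy = read_state()
saiyam_copy = read_state()

# Each adds his own EC2 instance to his private copy.
sp3_copy["resources"].append("ec2-sp3")
sp3_copy["serial"] += 1
saiyam_copy["resources"].append("ec2-saiyam")
saiyam_copy["serial"] += 1

# Both write back; the second write silently replaces the first.
write_state(sp3_copy)
write_state(saiyam_copy)

print(remote_state["resources"])  # ['ec2-saiyam'], sp3's instance is gone from the state
```

The instance sp3 created would still exist in AWS, but the state file no longer knows about it, which is exactly the kind of inconsistency described above.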

The solution to this problem is locking the state file. How do we implement it? Our state file is stored in an S3 bucket. Additionally, we create a Lock ID that is associated with our state file and with the client that is making the request. The Lock ID works as the primary key, because we store it in DynamoDB. If you know how databases work, a primary key is unique and cannot be repeated, so no other person can perform an operation on the state file at that particular time. Once the operation is fully complete, the lock is released and others can perform their operations.
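The "primary key cannot be repeated" idea can be sketched in a few lines of Python. This is only a toy model under stated assumptions: `LockTable` imitates a table keyed by the lock ID, `run_apply` is a hypothetical stand-in for an apply operation, and the key string is invented. It does not call AWS or Terraform.

```python
class LockTable:
    """Toy stand-in for a table whose primary key is the lock ID."""

    def __init__(self):
        self._items = {}  # lock_id -> owner

    def acquire(self, lock_id, owner):
        """Insert the lock item only if the key is not already present."""
        if lock_id in self._items:
            return False  # key already exists, someone else holds the lock
        self._items[lock_id] = owner
        return True

    def release(self, lock_id, owner):
        """Delete the lock item, but only for the client that created it."""
        if self._items.get(lock_id) == owner:
            del self._items[lock_id]
            return True
        return False


def run_apply(table, lock_id, owner, state, resource):
    """Hypothetical apply: take the lock, change the state, release the lock."""
    if not table.acquire(lock_id, owner):
        return "state is locked"
    try:
        state.append(resource)
    finally:
        table.release(lock_id, owner)
    return "applied"


table = LockTable()
state = []
LOCK_ID = "example-bucket/terraform.tfstate"  # hypothetical key, for illustration only

# sp3 starts an operation and holds the lock.
table.acquire(LOCK_ID, "sp3")

# Saiyam tries to apply while sp3 still holds the lock.
result_during = run_apply(table, LOCK_ID, "saiyam", state, "ec2-saiyam")

# sp3 finishes his change and releases the lock.
state.append("ec2-sp3")
table.release(LOCK_ID, "sp3")

# Saiyam retries once the lock is free.
result_after = run_apply(table, LOCK_ID, "saiyam", state, "ec2-saiyam")

print(result_during, "|", result_after, "|", state)
# state is locked | applied | ['ec2-sp3', 'ec2-saiyam']
```

Because the second request is rejected instead of overwriting the first, both instances end up in the state once the operations run one after the other.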

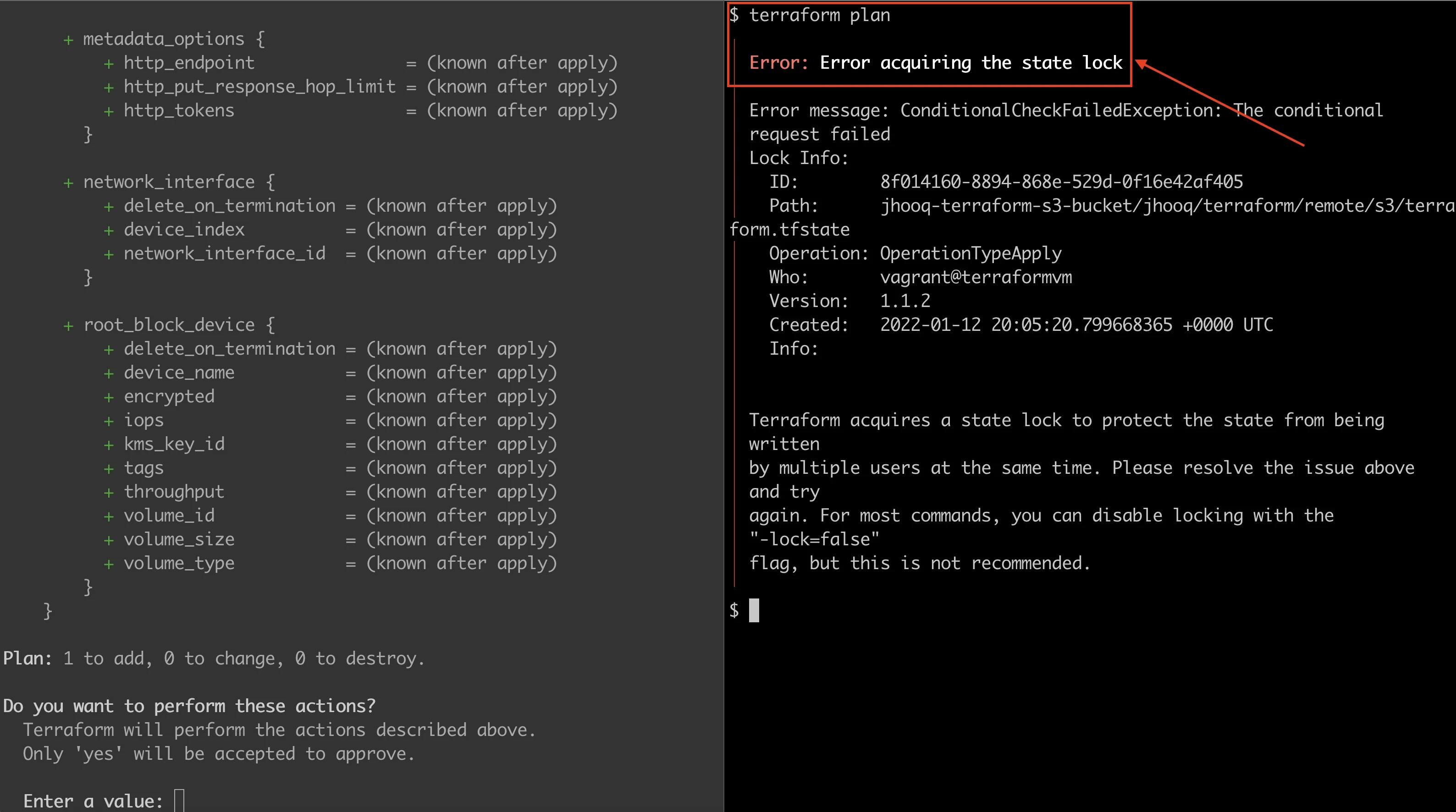

This is what the state lock error looks like 👆

Well, only the implementation with Komiser remains, and we will resume it in the next blog 👋

📍**Resources**:

Part4 Blog :

https://manogna.hashnode.dev/cloud-cost-monitoring-using-komiser-part-4

Part6 Blog :

Kubesimplify Github :

github.com/kubesimplify/cloudnative-lab

My Github :